
Evaluating Instructional Design

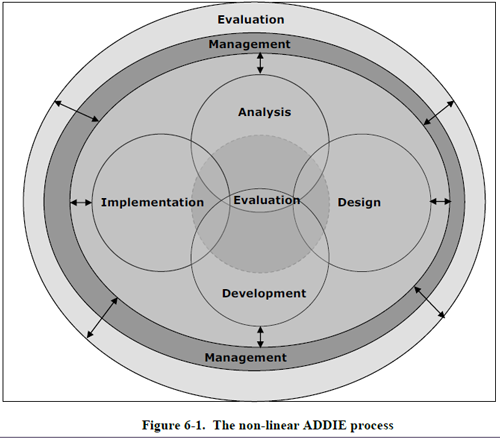

Evaluation is the systematic determination of the merit, worth, and significance of a learning or training process by comparing criteria against a set of standards. Although the evaluation phase is often listed last in the ISD process, it is actually ongoing throughout the entire process. This is part of what makes ISD (ADDIE) a dynamic process rather than a linear, or waterfall, process. The model above (Department of the Army, 2011) illustrates this dynamic process of evaluation.

The primary purpose of evaluation is to ensure that the stated goals of the learning process will actually meet the required business need. Thus, evaluation is performed not only at the end of the process but also during the first four phases of the ISD process:

- Analysis: Is the performance problem a training problem? How will implementing a learning platform positively affect a business need or goal? What must the learners be able to do in order to ensure the required change in performance?

- Design: What must be learned by the learners that will enable them to fulfill the required business need or goal?

- Development: What activities will best bring about the required performance?

- Implementation: Have the learners now become performers (do they have the skills and knowledge to perform the required tasks)?

- Evaluation: Formative evaluations are performed during the first four ADDIE phases (shown in the inner circle of the above model), while the last phase is normally used for a summative evaluation (shown in the outer circle of the above model).

Because evaluations are performed throughout the entire life cycle of the project in order to fix defects in the learning or training process, ISD or ADDIE is dynamic, NOT linear (waterfall)!

The two main requirements of the evaluation phase are: 1) ensuring that the learners can actually meet the new performance standards once they have completed their training and returned to their jobs; and 2) ensuring that the business need or goal is actually being met.

The most exciting place in teaching is the gap between what the teacher teaches and what the student learns. This is where the unpredictable transformation takes place, the transformation that means that we are human beings, creating and dividing our world, and not objects, passive and defined. - Alice Reich (1983).

Evaluations help to measure Reich's gap by determining the value and effectiveness of a learning program. They use assessment and validation tools to provide data for the evaluation. Assessment is the measurement of the practical results of the training in the work and learning environment, while validation determines whether the objectives of the training goal were met.

Bramley and Newby (1984) identified five main purposes of evaluation:

- Feedback - Linking learning outcomes to objectives and providing a form of quality control.

- Control - Making links from training to organizational activities and considering cost-effectiveness.

- Research - Determining the relationships between learning, training, and the transfer of training to the job.

- Intervention - The results of the evaluation influence the context in which it is occurring.

- Power games - Manipulating evaluative data for organizational politics (this is bad).

A literature review covering the seventeen-year period leading up to 1986 suggested that learning and performance processes are widely under-evaluated, and that the evaluation that is done is of uneven quality (Foxon, 1989).

Patel (2010) measured the use of Kirkpatrick's four levels of evaluation and found the following:

- 90% of companies surveyed measured trainee reactions

- 80% measured student learning

- 50% measured on-the-job behavior

- 40% reported measuring results (the most important measurement)

However, as organizations look to cut programs that do not work, this lackadaisical attitude towards training evaluation is changing. The shift is not necessarily towards ROI (Return on Investment), but rather towards ensuring that training supports the stakeholders' needs. This is primarily because most stakeholders do not view training as a profit center, but rather as a strategic partner that supports their goals.

Backwards Planning

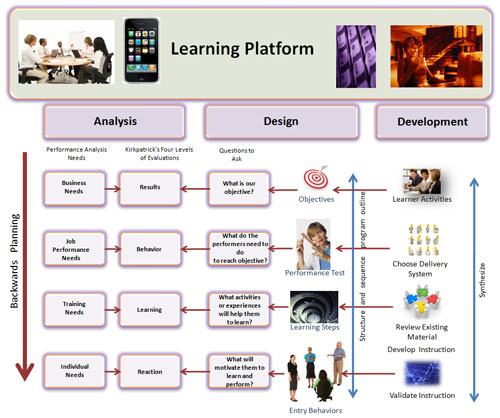

Throughout this ISD guide, we have been using the ADDIE Backwards Planning Model (shown above) to ensure that the learning platform is designed in a manner that achieves the specified Business Need or Objective.


The model is based on Kirkpatrick's Four Levels of Evaluation (1975); however, it starts with the last level and works backwards to achieve the desired goals. Three of the levels, Reaction (motivation), Learning, and Behavior (performance), are measured using formative evaluations, while the last level, Results, is used to judge the overall worth of the learning platform (summative evaluation).
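To make the backwards ordering concrete, here is a minimal Python sketch. It assumes each Kirkpatrick level can be represented by its number, its name, and the evaluation type (formative or summative) described in the paragraph above. The `Level` class and the `planning_order` and `delivery_order` functions are hypothetical names used only for illustration; they are not part of the ADDIE Backwards Planning Model itself.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Level:
    number: int      # Kirkpatrick level: 1 (Reaction) up to 4 (Results)
    name: str
    evaluation: str  # "formative" or "summative", per the mapping in the text


# Kirkpatrick's four levels with the formative/summative split described above.
LEVELS = [
    Level(1, "Reaction (motivation)", "formative"),
    Level(2, "Learning", "formative"),
    Level(3, "Behavior (performance)", "formative"),
    Level(4, "Results", "summative"),
]


def planning_order(levels):
    """Backwards planning: start with Results (level 4) and work down to Reaction."""
    return sorted(levels, key=lambda lvl: lvl.number, reverse=True)


def delivery_order(levels):
    """The order in which learners experience the levels: Reaction first, Results last."""
    return sorted(levels, key=lambda lvl: lvl.number)


if __name__ == "__main__":
    for lvl in planning_order(LEVELS):
        print(f"Plan level {lvl.number}: {lvl.name} ({lvl.evaluation})")
```

Running the script lists the levels in planning order, beginning with Results (the only summative level) and ending with Reaction. The design point is simply that the same four levels are planned in reverse of the order in which learners move through them.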


References

Bramley, P., & Newby, A. C. (1984). The evaluation of training part I: Clarifying the concept. Journal of European & Industrial Training, 8(6), 10-16.

Department of the Army. (2011). Army learning policy and systems. TRADOC Regulation 350-70.

Foxon, M. (1989). Evaluation of training and development programs: A review of the literature. Australian Journal of Educational Technology, 5(2), 89-104.

Kirkpatrick, D. L. (1975). Techniques for evaluating training programs. In D. L. Kirkpatrick (Ed.), Evaluating training programs. Alexandria, VA: ASTD.

Patel, L. (2010). ASTD state of the industry report 2010. Alexandria, VA: American Society for Training & Development.