Note: This site is moving to KnowledgeJump.com. Please reset your bookmark.

Kirkpatrick's Four Level Evaluation Model

Perhaps the best-known evaluation methodology for judging learning processes is Donald Kirkpatrick's Four Level Evaluation Model, which was first published in a series of articles in 1959 in the Journal of the American Society of Training Directors (now known as T+D Magazine). The series was later compiled and published as an article, Techniques for Evaluating Training Programs, in a book Kirkpatrick edited, Evaluating Training Programs (1975).

However, it was not until the publication of his 1994 book, Evaluating Training Programs, that the four levels became popular. Today, they remain a cornerstone of the learning industry.

While most people refer to the four criteria for evaluating learning processes as “levels,” Kirkpatrick never used that term; he normally called them “steps” (Craig, 1996). In addition, he did not call it a model, but used phrases such as “techniques for conducting the evaluation” (Craig, 1996).

The four steps of evaluation consist of:

- Step 1: Reaction - How well did the learners like the learning process?

- Step 2: Learning - What did they learn? (the extent to which the learners gain knowledge and skills)

- Step 3: Behavior - What changes in job performance resulted from the learning process? (capability to perform the newly learned skills while on the job)

- Step 4: Results - What are the tangible results of the learning process in terms of reduced cost, improved quality, increased production, efficiency, etc.?

Kirkpatrick's concept is quite important, as it makes an excellent planning, evaluating, and troubleshooting tool, especially if we make some slight improvements, as shown below.

Not Just for Training

While some mistakenly assume the four levels are only for training processes, the model can be used for other learning processes. For example, the Human Resource Development (HRD) profession is concerned not only with helping to develop formal learning, such as training, but also with other forms, such as informal learning, development, and education (Nadler, 1984). The profession's handbook, edited by one of the founders of HRD, Leonard Nadler (1984), uses Kirkpatrick's four levels as one of its main evaluation models.

Kirkpatrick himself wrote, “These objectives [referring to his article] will be related to in-house classroom programs, one of the most common forms of training. Many of the principles and procedures applies to all kinds of training activities, such as performance review, participation in outside programs, programmed instruction, and the reading of selected books” (Craig, 1996, p. 294).

Improving the Four Levels

Because of its age and the many technological advances since its introduction, Kirkpatrick's model is often criticized as outdated and too simple. Yet almost five decades after its introduction, no viable replacement has emerged. I believe the reason is that Kirkpatrick basically nailed it, although he did get a few things wrong:

Motivation, Not Reaction

When a learner goes through a learning process, such as an e-learning course, informal learning episode, or using a job performance aid, the learner has to make a decision as to whether he or she will pay attention to it. If the goal or task is judged as important and doable, then the learner is normally motivated to engage in it (Markus, Ruvolo, 1990).

However, if the task is presented as low-relevance or there is a low probability of success, then a negative effect is generated and motivation for task engagement is low. In addition, research on reaction evaluations generally shows that they are not a valid measure of success (see the last section, Criticisms).

This differs from Kirkpatrick (1996), who wrote that reaction was how well the learners liked a particular learning process. However, the less relevant a learning package is to a learner, the more effort has to be put into its design and presentation.

That is, if the package is not relevant to the learner, then the learning process has to hook the learner through slick design, humor, games, etc. This is not to say that design, humor, or games are unimportant; however, their use in a learning process should be to promote or aid learning rather than just to make it fun. If a learning package is built on a sound purpose and design, then it should help the learners bridge a performance gap. Hence, they should be motivated to learn; if not, something went dreadfully wrong during the planning and design processes! If you find yourself having to hook the learners through slick design, then you probably need to reevaluate the purpose of your learning processes.

Performance, Not Behavior

As Gilbert (1998) noted, performance is a better objective than behavior because performance has two aspects: behavior is the means and its consequence is the end, and it is the end we are mostly concerned with.

Flipping it into a Better Model

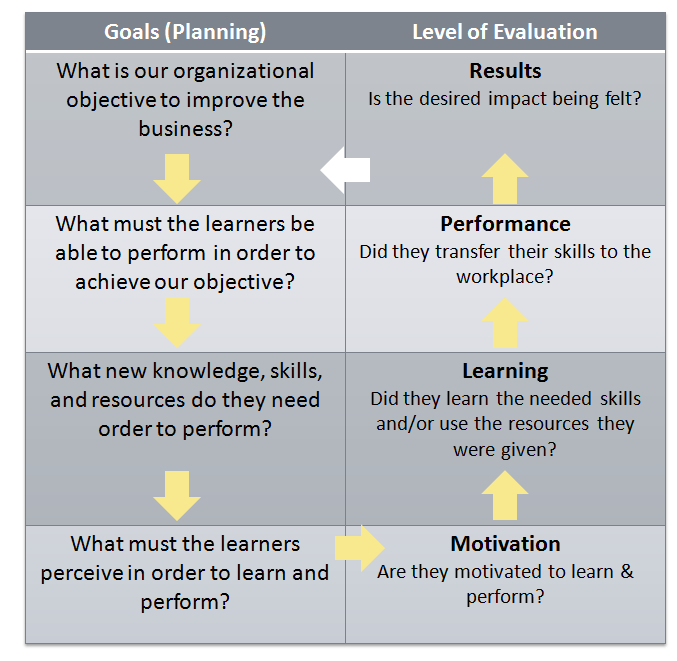

The model is upside down: it places the two most important items, results and behavior, last, and the order of a list imprints a sense of importance in most people's minds. Thus, by flipping it upside down and adding the above changes, we get:

- Result - What impact (outcome or result) will improve our business?

- Performance - What do the employees have to perform in order to create the desired impact?

- Learning - What knowledge, skills, and resources do they need in order to perform? (courses or classrooms are the LAST answer, see Selecting the Instructional Setting)

- Motivation - What do they need to perceive in order to learn and perform? (Do they see a need for the desired performance?)

This makes it both a planning and an evaluation tool that can be used as a troubleshooting heuristic (Chyung, 2008):

Revised Evaluation Model

The revised model can now be used for planning (left column) and evaluation (right column). In addition, it aids the troubleshooting process.

For example, if you know the performers learned their skills but do not use them in the work environment, then the two most likely trouble spots become apparent, as they are normally in the cell itself (in this example, the Performance cell) or in the cell to its left:

- There is a process in the work environment that constrains the performers from using their new skills, or

- the initial premise that the skills selected for training would bring about change is wrong.
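The troubleshooting heuristic above can be sketched in a few lines. This is a minimal sketch. It assumes the revised model is laid out as a table with one row per level, a planning cell on the left, and an evaluation cell on the right. It also assumes evaluation runs from Motivation up to Results. Both points are inferred from the text rather than taken from the diagram. The names `PLANNING_ORDER`, `Cell`, and `likely_trouble_spots` are hypothetical. The evaluation cell it returns corresponds to the first bullet (a work-environment constraint), and the planning cell corresponds to the second (a wrong initial premise).

```python
from dataclasses import dataclass

# Hypothetical layout of the revised model, in planning order (top to bottom).
# Evaluation is assumed to run in the reverse order, starting at Motivation.
PLANNING_ORDER = ["Results", "Performance", "Learning", "Motivation"]


@dataclass(frozen=True)
class Cell:
    level: str
    column: str  # "planning" (left column) or "evaluation" (right column)


def likely_trouble_spots(passed: dict[str, bool]) -> list[Cell]:
    """Return the first failing evaluation cell and the planning cell to its left.

    `passed` maps a level name to whether its evaluation succeeded; levels
    that are missing are treated as not evaluated and are skipped.
    """
    for level in reversed(PLANNING_ORDER):  # Motivation, Learning, Performance, Results
        if not passed.get(level, True):
            return [Cell(level, "evaluation"), Cell(level, "planning")]
    return []  # no evaluated level failed
```

For the example in the text (skills learned but not used), the call would be `likely_trouble_spots({"Motivation": True, "Learning": True, "Performance": False})`. It points at the Performance evaluation cell and the Performance planning cell. Stopping at the lowest failure is a design choice: a failure further down usually explains failures above it.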

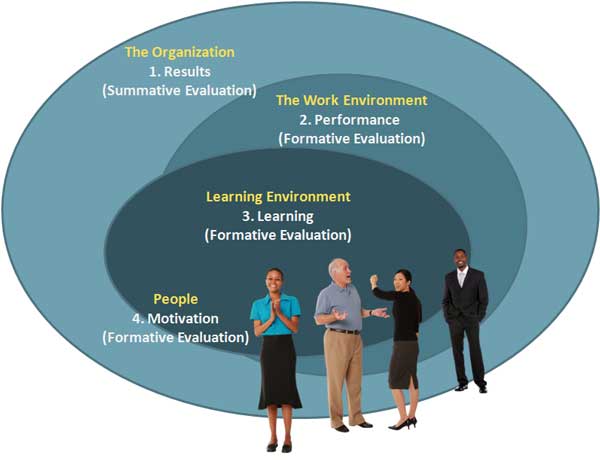

The diagram below shows how the evaluation processes fit together:

Learning and Work Environment

As the above diagram shows, the Results evaluation is of most interest to the business leaders, while the other three evaluations (performance, learning, and motivation) are essential to the learning designers for planning and evaluating their learning processes. Of course, the Results evaluation is also important to the designers, as it gives them a goal for improving the business. For more information, see Formative and Summative Evaluations.

Level Four - Results

Results or Impact measures the effectiveness of the initiative. Although it is normally more difficult and time-consuming to perform than the other three levels, it provides information of greater value, as it proves the worth of a learning and performance process.

However, using the Revised Evaluation Model shown above should ease the process, as you will now have a clear picture of what you are trying to achieve. That is, when you plan for something, you more readily understand how to evaluate it.

Motivation, Learning, and Performance are largely soft measurements; however, decision-makers who approve such learning processes prefer results (returns or impacts). Jack Phillips (1996), who probably knows Kirkpatrick's four levels better than anyone else does, writes that the value of information becomes greater as we go from motivation to results.

That does not mean the other three levels are useless; indeed, their benefit is the ability to locate problems within the learning package:

- The motivation evaluation informs you how relevant the learning process is to the learners (it measures how well the learning analysis processes worked). You may have all the other levels correct, but if the learners do not see a purpose for learning and performing, then they probably won't perform it.

- The learning evaluation informs you of the degree to which the learning process transferred the new skills to the learners (it measures how well the design and development processes worked).

- The performance evaluation informs you of the degree to which their skills actually transferred to their job (it measures how well the performance analysis process worked).

- The results evaluation informs you of the return the organization receives from supporting the learning process. Decision-makers normally prefer this harder result, although not necessarily in dollars and cents. For example, a study of financial and information technology executives found that they consider both hard and soft returns when it comes to customer-centric technologies, but give more weight to non-financial metrics (soft), such as customer satisfaction and loyalty (Hayes, 2003).

Note the difference between “information” and “returns.” Motivation, Learning, and Performance measurements give you information for improving and evaluating the learning process, which mostly concerns the learning designers, while the Results measurement gives you the returns for investing in the learning process, which mostly concerns the business leaders.

The Results measurement of a learning process might take a more balanced approach, such as a balanced scorecard (Kaplan, Norton, 2001), which looks at the impact or return from four perspectives:

- Financial: A measurement, such as an ROI, that shows a monetary return, or the impact itself, such as how the output is affected. Financial can be either soft or hard results.

- Customer: Improving an area in which the organization differentiates itself from competitors to attract, retain, and deepen relationships with its targeted customers.

- Internal: Achieve excellence by improving such processes as supply-chain management, production process, or support process.

- Innovation and Learning: Ensuring the learning package supports a climate for organizational change, innovation, and the growth of individuals.
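The Financial perspective often uses the conventional ROI calculation, which divides net program benefits (benefits minus costs) by program costs. A closely related figure is the benefit-cost ratio. This is a minimal sketch. It assumes benefits and costs have already been converted to the same currency and cover the same period, which is usually the hard part in practice. The function names are hypothetical.

```python
def training_roi(benefits: float, costs: float) -> float:
    """Return ROI as a percentage: (benefits - costs) / costs * 100.

    Assumes both figures use the same currency and period; costs must be positive.
    """
    if costs <= 0:
        raise ValueError("costs must be positive")
    net_benefits = benefits - costs
    return 100 * net_benefits / costs


def benefit_cost_ratio(benefits: float, costs: float) -> float:
    """Return the benefit-cost ratio, benefits / costs."""
    if costs <= 0:
        raise ValueError("costs must be positive")
    return benefits / costs
```

Take a hypothetical program that costs 50,000 and yields 80,000 in benefits. It gives an ROI of 60% and a benefit-cost ratio of 1.6. This covers only the Financial perspective. The other three perspectives, and the soft measures that executives often weigh more heavily (Hayes, 2003), need their own indicators.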

Evaluating Impact

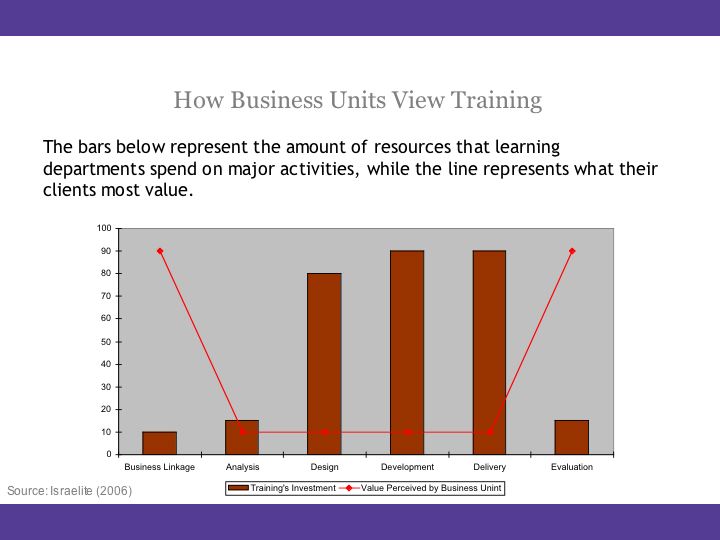

Showing the impact of learning is important as it allows the customer to know exactly how the learning process will bring positive results to the business. However, while the business units value the business linkage (impact or outcome) and evaluation (measurement) the most, learning departments often spend the least amount of time and resources on these two activities:

Level Three - Performance

This evaluation involves testing the learner's capabilities to perform learned skills while on the job. These evaluations can be performed formally (testing) or informally (observation). It determines if the correct performance is now occurring by answering the question, “Do people use their newly acquired skills on the job?”

It is important to measure performance because the primary purpose of learning in the organization is to improve results by having its people learn new skills and knowledge and then actually apply them on the job. Since performance measurements must take place while the learners are doing their work, the measurement will typically involve someone closely involved with the learner, such as a supervisor or a trained observer or interviewer.

Level Two - Learning

This is the extent to which learners improve knowledge, increase skill, and change attitudes as a result of participating in a learning process. The learning evaluation normally requires some type of testing, typically both before and after the process, to ascertain which skills were learned during the process and which skills the learners already had.
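A post-test score on its own cannot separate what was learned from what learners already knew. Pairing it with a pre-test is one simple way to estimate the gain. This is a minimal sketch. It assumes each learner takes a pre-test and a post-test scored on the same scale, listed in the same order. The function name `learning_gains` is hypothetical.

```python
from statistics import mean


def learning_gains(pre: list[float], post: list[float]) -> tuple[list[float], float]:
    """Return each learner's gain (post - pre) and the mean gain across learners.

    Assumes `pre` and `post` hold one score per learner, in the same order
    and on the same test scale.
    """
    if len(pre) != len(post):
        raise ValueError("pre and post must have one score per learner")
    if not pre:
        raise ValueError("at least one learner is required")
    gains = [after - before for before, after in zip(pre, post)]
    return gains, mean(gains)
```

The per-learner list matters as much as the mean. A learner with a near-zero gain and a high pre-test score may already have had the skill. A learner with a near-zero gain and a low score points back at the design of the learning process.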

Measuring the learning that takes place is important in order to validate the learning objectives. Evaluating the learning that has taken place typically focuses on such questions as:

- What knowledge was acquired?

- What skills were developed or enhanced?

- What attitudes were changed?

Learner assessments are created to allow a judgment to be made about the learner's capability for performance. There are two parts to this process: the gathering of information or evidence (testing the learner) and the judging of the information (what does the data represent?). This assessment should not be confused with evaluation. Assessment is about the progress and achievements of the individual learners, while evaluation is about the learning program as a whole (Tovey, 1997).

Level One - Motivation

Assessment at this level measures how the learners perceive and react to the learning and performance process. This level is often measured with attitude questionnaires that are passed out after most training classes. Learners are often keenly aware of what they need to know to accomplish a task. If the learning process fails to satisfy their needs, a determination should be made as to whether the fault lies in the design of the learning process or in the learners not perceiving its true benefits.

When a learning process is first presented, whether it be eLearning, mLearning, classroom training, a job performance aid, or a social media tool, the learner has to make a decision as to whether he or she will pay attention to it. If the goal or task is judged as important and doable, then the learner is normally motivated to engage in it (Markus, Ruvolo, 1990). However, if the task is presented as low-relevance or there is a low probability of success, then a negative effect is generated and motivation for task engagement is low.

Criticisms

There are three problematic assumptions of the Kirkpatrick model: 1) the levels are arranged in ascending order, 2) the levels are causally linked, and 3) the levels are positively inter-correlated (Alliger and Janak, 1989).

The only part of Kirkpatrick's four levels that has failed to hold up to scrutiny over time is Reaction. For example, a Century 21 trainer with some of the lowest Level One scores was responsible for the highest performance outcomes post-training (Level Four), as measured by his graduates' productivity. This is not an isolated incident: in study after study, the evidence shows very little correlation between Reaction evaluations and how well people actually perform when they return to their jobs (Boehle, 2006).
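One way to check this relationship for your own programs is to compute Pearson's correlation coefficient. It compares learners' Reaction scores with a later performance measure for the same learners. This is a minimal sketch. It assumes the two score lists are paired by learner, and the function name `pearson_r` is hypothetical. A value near zero would be consistent with the weak relationship reported above. A small sample gives an unstable estimate, and correlation alone does not establish cause.

```python
from math import sqrt
from statistics import mean


def pearson_r(reaction: list[float], performance: list[float]) -> float:
    """Pearson correlation between paired Reaction and performance scores.

    Returns a value in [-1, 1]; values near 0 indicate little linear relationship.
    """
    if len(reaction) != len(performance) or len(reaction) < 2:
        raise ValueError("need at least two learners with paired scores")
    mx, my = mean(reaction), mean(performance)
    # Sum of products of deviations (the unnormalized covariance).
    cov = sum((x - mx) * (y - my) for x, y in zip(reaction, performance))
    sx = sqrt(sum((x - mx) ** 2 for x in reaction))
    sy = sqrt(sum((y - my) ** 2 for y in performance))
    if sx == 0 or sy == 0:
        raise ValueError("scores must vary across learners")
    return cov / (sx * sy)
```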

Rather than measuring reaction, what we are now discovering is that we should be pre-framing the learners by having their managers or informal leaders discuss the importance of participating in a learning process (on-ramping), and then following up with them to ensure they are using their new skills (Wick et al., 2006). This is another reason for changing the term “reaction” to “motivation.”

Kirkpatrick's four levels treat evaluation as an end-of-process activity, whereas the objective should be to treat evaluation as an ongoing activity that begins during the pre-learning phase.

Actually, this criticism is inaccurate. For example, the ASTD Training & Development Handbook (1996), edited by Robert Craig, includes a chapter by Kirkpatrick with the simple title “Evaluation.” In the chapter, Kirkpatrick discusses using control groups and measuring before and after the training (such as with pre- and post-tests).

He goes on to say that level four should also include a post-training appraisal three or more months after the learning process to ensure the learners put into practice what they have learned.

Kirkpatrick further notes that evaluations should be conducted throughout the learning process, not only during each session or module but also after each subject or topic.
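The before-and-after comparison with a control group can be summarized as a single number. The sketch takes the average change in the trained group and subtracts the average change in an untrained control group measured over the same period, for example at the appraisal three or more months later. This is a minimal sketch. It illustrates the idea rather than reproducing Kirkpatrick's own procedure, and the function name `adjusted_gain` is hypothetical. It assumes both groups are scored on the same measure.

```python
from statistics import mean


def adjusted_gain(
    trained_pre: list[float],
    trained_post: list[float],
    control_pre: list[float],
    control_post: list[float],
) -> float:
    """Mean gain of the trained group minus mean gain of the control group.

    Subtracting the control group's change removes improvement that would
    have happened anyway (seasonality, experience on the job, and so on).
    """
    for pre, post in ((trained_pre, trained_post), (control_pre, control_post)):
        if len(pre) != len(post) or not pre:
            raise ValueError("each group needs paired, non-empty pre/post scores")
    trained_gain = mean(trained_post) - mean(trained_pre)
    control_gain = mean(control_post) - mean(control_pre)
    return trained_gain - control_gain
```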

The four levels are only for training processes, rather than other forms of learning.

As noted in the section Not Just for Training, Kirkpatrick wrote that the four levels can be used in other types of learning processes. In addition, the Human Resource Development profession, which helps deliver both informal and formal learning, uses Kirkpatrick's four levels as one of its main evaluation models. Perhaps the real reason that informal learning advocates do not see the model as useful is that “it was not invented here.”

The four levels of evaluation mean very little to the other business units

One of the best training and development books is The Six Disciplines of Breakthrough Learning by Wick, Pollock, Jefferson, and Flanagan (2006). They offer perhaps the best criticism I have seen:

“Unfortunately, it is not a construct widely shared by business leaders, who are principally concerned with learning's business impact. Thus, when learning leaders write and speak in terms of levels of evaluation to their business colleagues, it reflects a learning-centric perspective that tends to confuse rather than clarify issues and contribute to the lack of understanding between business and learning functions.”

So it might turn out that the best criticism is leveled not at the four levels themselves, but at the way we use them when speaking to other business leaders. We tell the business units that the level-one evaluations show the learners were happy, that the level-two evaluations show they all passed the test with flying colors, and so on up the line. Yet according to the surveys I have seen, results or impact, which business leaders value most highly, is rarely used.

The other levels of evaluation can be quite useful within the design process as they help us to discuss what type of evaluation we are speaking about and pinpoint troubled areas. However, outside of the learning and development department they often fall flat. For the most part, the business leaders' main concern is the IMPACT—did the resources we spent on the learning process contribute to the overall health and prosperity of the enterprise?


References

Alliger, G.M., Janak, E.A. (1989). Kirkpatrick's levels of training criteria: Thirty years later. Personnel Psychology, 42(2):331–342.

Boehle, S. (2006). Are You Too Nice to Train? Training Magazine. Retrieved from: http://www.trainingmag.com/msg/content_display/training/e3iwtqVX4kKzJL%2BEcpyFJFrFA%3D%3D?imw=Y

Chyung, S.Y. (2008). Foundations of Instructional Performance Technology. Amherst, MA: HRD Press Inc.

Craig, R.L. (ed.) (1996). The ASTD Training and Development Handbook. New York: McGraw-Hill, p. 294.

Gilbert, T. (1998). A Leisurely Look at Worthy Performance. Woods, Cortada (eds.). The 1998 ASTD Training and Performance Yearbook. New York: McGraw-Hill.

Hayes, M. (2003). Just Who's Talking ROI? InformationWeek, Feb. 2003, p. 18.

Kaplan, R.S., Norton, D.P. (2001). The Strategy-Focused Organization: How Balanced Scorecard Companies Thrive in the New Business Environment. Boston, MA: Harvard Business School Press.

Kirkpatrick, D.L. (1959). Techniques for evaluating training programs. Journal of the American Society of Training Directors, 13(3), pp. 21–26.

Kirkpatrick, D.L. (1975). Techniques for Evaluating Training Programs. Kirkpatrick (ed.). Evaluating Training Programs. Alexandria, VA: ASTD.

Kirkpatrick, D.L. (1994). Evaluating Training Programs. San Francisco: Berrett-Koehler Publishers, Inc.

Markus, H., Ruvolo, A. (1990). Possible selves: Personalized representations of goals. Pervin (ed.). Goal Concepts in Psychology. Hillsdale, NJ: Lawrence Erlbaum, pp. 211–241.

Nadler, L. (1984). The Handbook Of Human Resource Development. New York: John Wiley & Sons.

Phillips, J. (1996). Measuring the Results of Training. The ASTD Training and Development Handbook. Craig, R. (ed.). New York: McGraw-Hill.

Tovey, M. (1997). Training in Australia. Sydney: Prentice Hall Australia, p88. (Note: this is perhaps one of the best books on the ISD or ADDIE process)

Wick, C.W., Pollock, R.V.H., Jefferson, A.K., Flanagan, R.D. (2006). The Six Disciplines of Breakthrough Learning. San Francisco, CA: Pfeiffer.